I once took a computer vision class. Every algorithm we learned operated on grayscale images, as if it were 1950 and we couldn't afford those newfangled colour VDTs. I put up my hand and asked why we discard all of this lovely colour information. The prof answered that some people have tried it, but it doesn't give much benefit.

But in some situations, colour can be important.

First, we convert to grayscale:

Umm......... I don't think we need to go any further here. 'Nuf said.

Computer vision researchers use two approaches for dealing with colour. The first is to split the image into red, green, and blue images, run the algorithm three times, and combine the results. The second is to treat the RGB values as vectors and use the distance between two vectors as the colour difference. These approaches can work, but they don't take human perception of colour into account.

The RGB values in an image have very little to do with how the human eye works. They have more to do with the voltages that drove the phosphors of a 1980s-era CRT display than with human vision. (Let's conveniently ignore the fact that LCDs have completely different properties. Everybody else does.)

To compare two colours, you have to first convert them to a different colour space. If you find yourself subtracting RGB values, you're doing it wrong.

In 1915, Albert Munsell created a Colour Atlas, a book of colour paint samples. He came up with a way of arranging them logically that made sense to his eye. Each colour was indexed by hue, value (also known as lightness), and chroma (roughly, saturation). We still use his system today, and most of modern colour theory is based on Munsell's ideas.

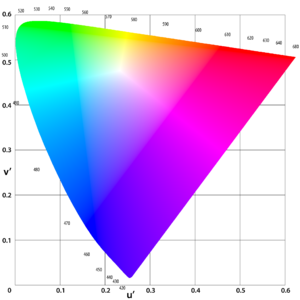

The CIE is an international authority in charge of standardizing things to do with light and colour. In 1960 they created a mathematical arrangement of colour, and they improved it in 1976. In this space, the more different two colours look, the farther apart they are. The arrangement is not perfect, but the math is simple enough to work with. It's no good to create an international standard that nobody understands.

A nice, easy way to perform edge detection is to use the Sobel operator:
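In its standard form, with $A$ standing for the grayscale input image (a symbol introduced here for illustration), the operator applies two 3x3 masks around every pixel and combines the results:

$$
G_x = \begin{bmatrix} -1 & 0 & +1 \\ -2 & 0 & +2 \\ -1 & 0 & +1 \end{bmatrix} * A,
\qquad
G_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ +1 & +2 & +1 \end{bmatrix} * A,
\qquad
G = \sqrt{G_x^2 + G_y^2}
$$

Textbooks differ on which side of each mask carries the minus sign; it does not matter here, because only $G_x^2$ and $G_y^2$ enter the final magnitude $G$.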

Translation: We create two new images, Gx and Gy. Each pixel in a new image gets its value from the differences between the pixels surrounding the corresponding position in the old image. In the Gx image, the differences are taken horizontally (left to right); in the Gy image, they are taken vertically (up and down). After the two new images are created, we combine them into one: for each pixel, we square the two values, add them together, and take the square root. The result is the edge-detected image.

I like the Sobel algorithm because it is easy to adapt to colour differences. Instead of subtracting the pixels' gray values, we measure the difference between two colours as their distance in L*u*v* colour space, which is approximately perceptually uniform.

I implemented the two algorithms in 400 lines of C code. Given a PNG image, the program reads it and performs edge detection first on the grayscale version, and then on the colour version using colour differences. In both cases, the output is normalized so that the highest difference is white and the lowest is black. Here are the results.
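The normalization step can be sketched as a linear rescale of the edge magnitudes to 8-bit gray levels. The function name, the rounding, and the all-black output for a perfectly flat image are assumptions; the post only says that the highest difference maps to white and the lowest to black.

```c
#include <stddef.h>

/* Map edge magnitudes linearly to 0..255 so the largest difference
   becomes white (255) and the smallest becomes black (0). */
void normalize_to_8bit(const double *mag, unsigned char *out, size_t n)
{
    if (n == 0)
        return;
    double lo = mag[0], hi = mag[0];
    for (size_t i = 1; i < n; i++) {
        if (mag[i] < lo) lo = mag[i];
        if (mag[i] > hi) hi = mag[i];
    }
    double range = hi - lo;
    for (size_t i = 0; i < n; i++) {
        /* A flat image has no edges, so it becomes all black. */
        double t = range > 0.0 ? (mag[i] - lo) / range : 0.0;
        out[i] = (unsigned char)(t * 255.0 + 0.5); /* round to nearest */
    }
}
```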

Note: The grayscale image appears blurred, and the colour one does not. This is an error in the blog entry and should be corrected later. The implementation blurs both versions before performing edge detection, to reduce noise.
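The post does not say which blur the implementation uses. As one common choice, assumed here for illustration, a 3x3 binomial kernel (a small approximation of a Gaussian) can be run over each channel before edge detection. The function name and the border clamping are likewise assumptions.

```c
#include <stddef.h>

/* Clamp v into [lo, hi]; used to repeat border pixels at the edges. */
static int clampi(int v, int lo, int hi)
{
    return v < lo ? lo : (v > hi ? hi : v);
}

/* 3x3 binomial blur with weights 1 2 1 / 2 4 2 / 1 2 1, divided by 16.
   Apply once per channel; `in` and `out` are row-major width*height. */
void blur3x3(const double *in, double *out, int width, int height)
{
    static const int k[3][3] = { {1, 2, 1}, {2, 4, 2}, {1, 2, 1} };
    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            double sum = 0.0;
            for (int dy = -1; dy <= 1; dy++) {
                for (int dx = -1; dx <= 1; dx++) {
                    int sx = clampi(x + dx, 0, width - 1);
                    int sy = clampi(y + dy, 0, height - 1);
                    sum += k[dy + 1][dx + 1] * in[(size_t)sy * width + sx];
                }
            }
            out[(size_t)y * width + x] = sum / 16.0;
        }
    }
}
```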

Traditional approaches for colour

Colour Difference

People can distinguish skin colours very well, so we can tell if a potential mate is healthy or not. This is more important than distinguishing different shades of green leaves, which would be mere distractions from the delicious bouquet of fruits hanging in trees. Describing the exact shade of an azure sky is simply not required for survival. We are hypersensitive to differences in yellows, reds, and oranges.

Finding outlines in images

Edge detection is usually the first step in any computer vision algorithm, because it converts an image into a black and white outline that is easier to work with.

Results

Grayscale original

Traditional Edge Detection

Colour Original

Colour Edge Detection

Grayscale original

Traditional Edge Detection

Colour Original

Colour Edge Detection

Further Reading

A practical, memory efficient way to store and search large sets of words.

Why don't web pages start as fast as this computer from 1984?

If you have to draw something called "UML Sequence Diagrams" for work or school, you already know that it can take hours to get a diagram to look right. Here's a web site that will save you some time.

Make sure your lines are sharp using this simple trick.